AI Security Breaches: 2025’s Lessons for Every Organization

AI Security Breaches: 2025’s Lessons for Every Organization

AI-driven apps and tools now power everything from healthcare to logistics, but as their use accelerates, so do the risks. The latest breach reports show alarming gaps in AI security—and growing costs that organizations can’t ignore.

The Scale of the Issue

In 2025, 97% of AI apps were found lacking basic security controls. Shadow AI and rogue agents account for over 20% of global breaches, with median costs to businesses topping $670,000 per incident. Breaches often occur through compromised APIs, third-party integrations, and poorly governed chatbot deployments.

Real-World Examples

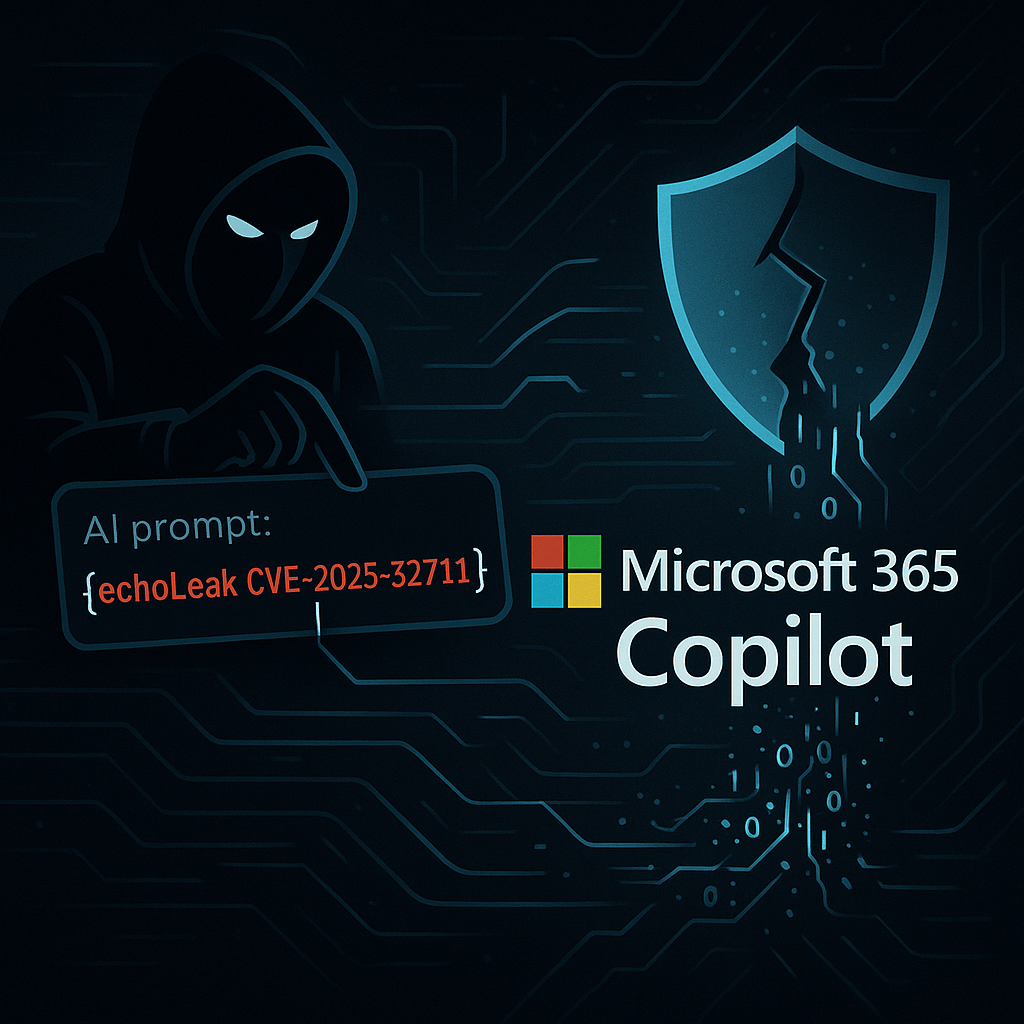

Major brands have faced AI breaches, including sensitive data leaks via generative AI tools, prompt injection exploits, and supply chain vulnerabilities. These incidents highlight the importance of monitoring both human and machine identities—and automating compliance wherever possible.

Proactive Response

Key steps for resilience:

- Establish governance and clear AI usage policies.

- Monitor all AI identities, usage, and access.

- Deploy automated security testing on AI-powered software.

AI makes business more efficient, but without robust safeguards, it can also escalate risks. Leaders must prioritize continuous monitoring and sound governance to stay ahead.

Responses