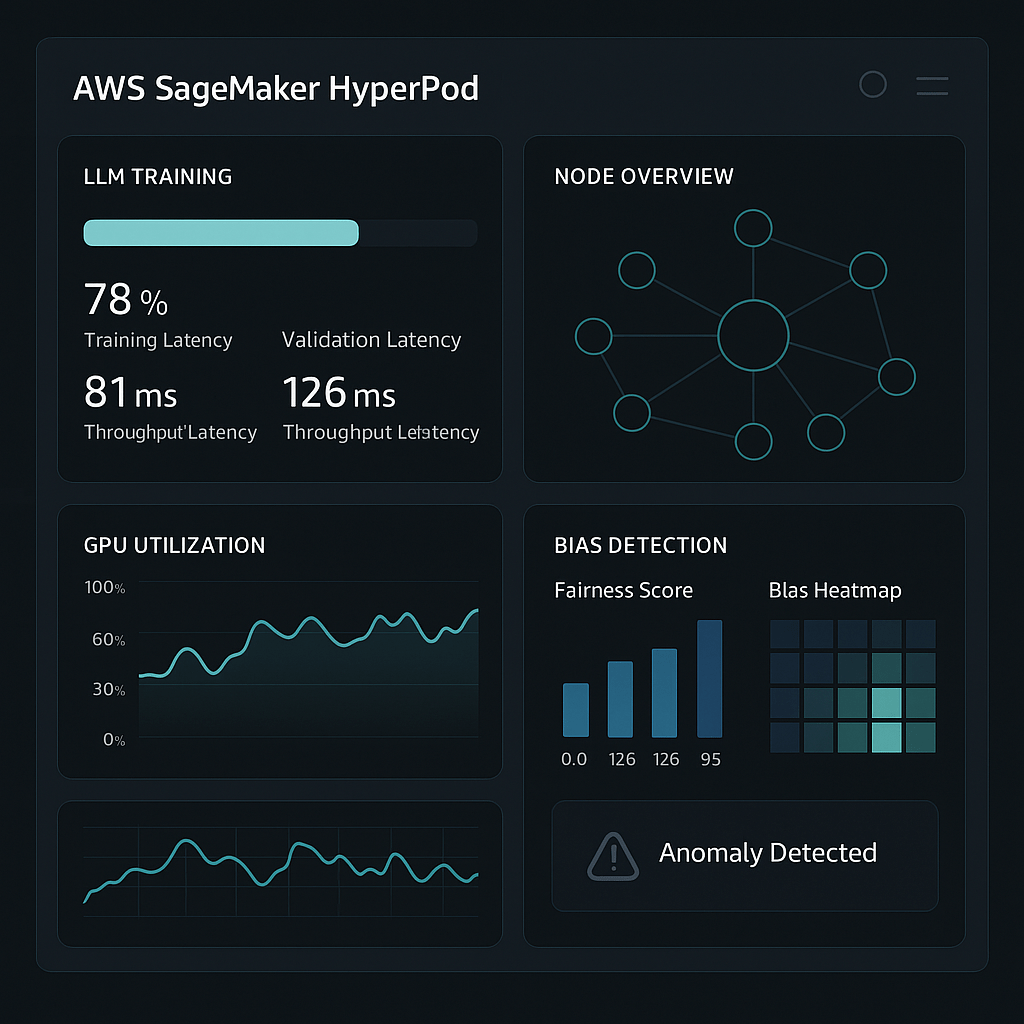

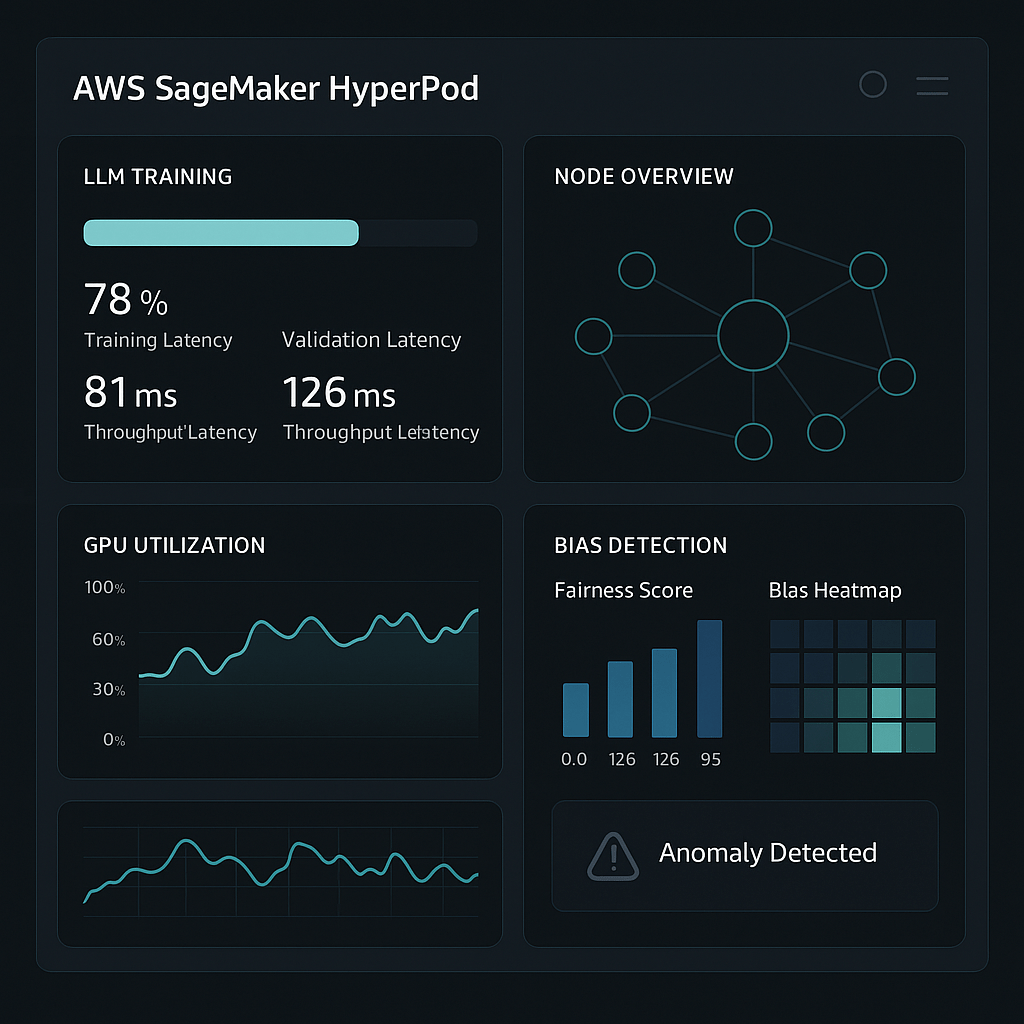

Accelerate Distributed LLM Training and Bias Detection with SageMaker HyperPod

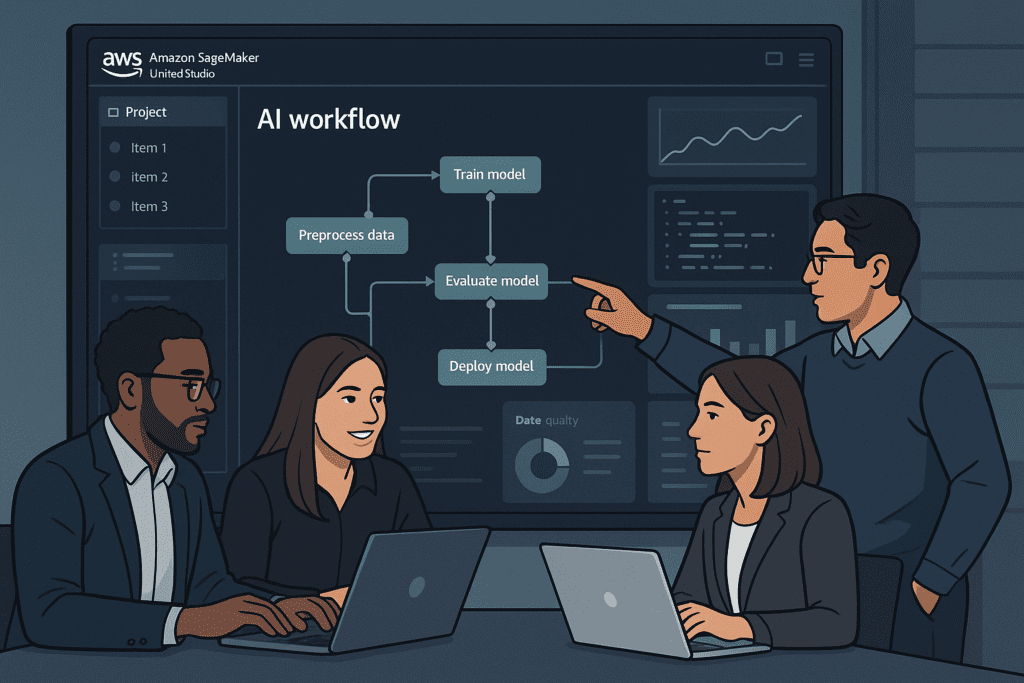

The rapid growth of large language models (LLMs) is reshaping how teams approach enterprise AI, but training these models reliably and responsibly raises new engineering and ethical challenges. Amazon SageMaker HyperPod is AWS’s purpose-built infrastructure for training and deploying foundation models at scale. It removes much of the operational overhead of running large clusters while giving ML teams fine-grained control and observability.

What Makes HyperPod Different?

- Distributed Training at Scale: HyperPod provisions persistent, fault-tolerant clusters of hundreds or thousands of AI accelerators (such as AWS Trainium chips or NVIDIA H100 GPUs) to run data-parallel and model-parallel training efficiently. Teams can orchestrate workloads with Slurm or Amazon Elastic Kubernetes Service (Amazon EKS) and scale them across the cluster; AWS reports that HyperPod can cut training time by up to 40%.

- Self-Healing & Resilient: HyperPod monitors node health, replaces faulty hardware automatically, and resumes jobs from the most recent checkpoint, so ML teams can pause, resume, and tune long-running training jobs. A job interrupted by a failed GPU node restarts from its last checkpoint instead of from scratch, which can save weeks or months over the course of model development.

- Customizable Environments: Enterprises gain deep infrastructure control, including custom Amazon Machine Images and policy enforcement, so they can align clusters with organizational standards for security and compliance.
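Automatic recovery only pays off if the training script can pick up where it left off. HyperPod relaunches the interrupted job (for Slurm clusters, the HyperPod documentation describes an `--auto-resume=1` option for `srun`), but restoring model state is the script's responsibility. The sketch below illustrates that checkpoint-and-resume pattern with a toy loop in plain Python. The `train`, `save_checkpoint`, and `load_checkpoint` helpers, the JSON file layout, and the simulated failure are hypothetical stand-ins, not HyperPod APIs; a real job would save model and optimizer state with its framework (for example `torch.save`) to shared storage such as Amazon FSx for Lustre.

```python
import json
import os
import tempfile

# Hypothetical helpers for illustration; not part of any HyperPod or SageMaker API.


def save_checkpoint(path: str, step: int, state: dict) -> None:
    """Write the checkpoint atomically so a crash mid-write cannot corrupt it."""
    directory = os.path.dirname(path) or "."
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp_path, path)  # atomic rename on POSIX filesystems


def load_checkpoint(path: str):
    """Return (step, state) from the latest checkpoint, or a fresh start."""
    if not os.path.exists(path):
        return 0, {"weight": 0.0}
    with open(path) as f:
        ckpt = json.load(f)
    return ckpt["step"], ckpt["state"]


def train(path: str, total_steps: int, checkpoint_every: int = 10, fail_at=None) -> dict:
    """Toy training loop that always resumes from the last saved checkpoint.

    `fail_at` simulates a node failure by raising before that step runs.
    """
    step, state = load_checkpoint(path)
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated node failure at step {step}")
        state["weight"] += 0.5  # stand-in for one optimizer update
        step += 1
        if step % checkpoint_every == 0 or step == total_steps:
            save_checkpoint(path, step, state)
    return state


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        ckpt_path = os.path.join(workdir, "checkpoint.json")
        try:
            train(ckpt_path, total_steps=100, fail_at=25)
        except RuntimeError as err:
            # On a real cluster, the scheduler would relaunch the job here.
            print(f"job interrupted: {err}")
        # The relaunched job resumes from step 20 (the last checkpoint) and
        # repeats only the five updates made after it.
        final_state = train(ckpt_path, total_steps=100)
        print(final_state)
```

Because the relaunched run restores its state from step 20, it ends in the same state as an uninterrupted 100-step run. The checkpoint interval trades checkpointing I/O against the amount of work that must be redone after a failure.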

Why Bias Detection Matters

Training LLMs at scale is only the first step. SageMaker Clarify, which can be used alongside HyperPod training workflows, supports bias detection at three critical stages:

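- Pre-training: Detect imbalances in the training data across sensitive attributes (e.g., age or gender) with metrics such as class imbalance (CI) and difference in proportions of labels (DPL), review them in visual reports, and address them with data-balancing techniques such as resampling or reweighting.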

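- Post-training: Measure bias in model predictions across subgroups with metrics such as difference in positive proportions in predicted labels (DPPL) and disparate impact (DI), and use the results to guide hyperparameter tuning toward fairer outcomes.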

- Deployment Monitoring: Used with SageMaker Model Monitor, Clarify tracks bias drift in production models as live data shifts. Threshold-based alerts, surfaced in SageMaker Studio or Amazon CloudWatch, help teams remediate issues proactively.
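To make these stages concrete, the sketch below reimplements four bias metrics defined in the SageMaker Clarify documentation (CI and DPL before training, DPPL and DI after training) in plain Python. Clarify computes these for you, for example through the `sagemaker.clarify` module of the SageMaker Python SDK, so this is only an illustration of what the reported numbers mean. The toy dataset, the facet values, the function names, and the 0.8 to 1.25 alert band for DI (a common four-fifths-rule convention) are assumptions chosen for the example.

```python
from typing import List, Sequence, Tuple


def _positive_rate(labels: Sequence[int]) -> float:
    """Fraction of labels equal to 1 (the positive outcome)."""
    if not labels:
        raise ValueError("facet group is empty")
    return sum(1 for y in labels if y == 1) / len(labels)


def _split(values: Sequence[int], facet: Sequence[str], facet_d: str) -> Tuple[List[int], List[int]]:
    """Split values into facet a (everyone else) and facet d (the group examined)."""
    group_a = [v for v, f in zip(values, facet) if f != facet_d]
    group_d = [v for v, f in zip(values, facet) if f == facet_d]
    return group_a, group_d


# --- Pre-training metrics: computed on the dataset's observed labels ---
def class_imbalance(facet: Sequence[str], facet_d: str) -> float:
    """CI = (n_a - n_d) / (n_a + n_d)."""
    n_d = sum(1 for f in facet if f == facet_d)
    n_a = len(facet) - n_d
    return (n_a - n_d) / (n_a + n_d)


def difference_in_label_proportions(labels: Sequence[int], facet: Sequence[str], facet_d: str) -> float:
    """DPL = q_a - q_d, where q is the share of positive observed labels."""
    group_a, group_d = _split(labels, facet, facet_d)
    return _positive_rate(group_a) - _positive_rate(group_d)


# --- Post-training metrics: computed on the model's predicted labels ---
def difference_in_predicted_proportions(predicted: Sequence[int], facet: Sequence[str], facet_d: str) -> float:
    """DPPL = q'_a - q'_d, where q' is the share of positive predictions."""
    group_a, group_d = _split(predicted, facet, facet_d)
    return _positive_rate(group_a) - _positive_rate(group_d)


def disparate_impact(predicted: Sequence[int], facet: Sequence[str], facet_d: str) -> float:
    """DI = q'_d / q'_a; 1.0 means equal positive-prediction rates.

    Raises ZeroDivisionError if facet a receives no positive predictions.
    """
    group_a, group_d = _split(predicted, facet, facet_d)
    return _positive_rate(group_d) / _positive_rate(group_a)


# --- Monitoring: hypothetical threshold rule for raising an alert ---
def bias_alert(di: float, low: float = 0.8, high: float = 1.25) -> bool:
    """Return True when DI falls outside the assumed [low, high] band."""
    return not (low <= di <= high)


if __name__ == "__main__":
    # Hypothetical toy data: facet value per record, observed labels, predictions.
    facet = ["M"] * 6 + ["F"] * 4
    labels = [1, 1, 1, 0, 0, 0, 1, 0, 0, 0]
    predicted = [1, 1, 1, 1, 0, 0, 1, 1, 0, 0]

    di = disparate_impact(predicted, facet, "F")
    print(f"CI   = {class_imbalance(facet, 'F'):.3f}")
    print(f"DPL  = {difference_in_label_proportions(labels, facet, 'F'):.3f}")
    print(f"DPPL = {difference_in_predicted_proportions(predicted, facet, 'F'):.3f}")
    print(f"DI   = {di:.3f}, alert = {bias_alert(di)}")
```

In this toy data, facet F receives positive predictions at a rate of 0.5 versus about 0.67 for facet M, giving a DI of 0.75. That is below the assumed 0.8 floor, so a monitoring job built on this rule would raise an alert.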

Real-World Impact

Major tech and research organizations, from startups to large enterprises, have used HyperPod to accelerate the development of advanced models (e.g., Falcon 180B, Amazon Nova), with substantial cost savings, improved reliability, and fairness oversight. As distributed training and responsible AI increasingly intersect, HyperPod and Clarify sit at the heart of emerging industry best practices.

If your organization is architecting LLM solutions, consider not just infrastructure scale but also robust bias detection as a key pillar for responsible enterprise AI.
