Improved Security with AI: 2025’s Critical Lessons and Best Practices

Artificial intelligence is transforming security for organizations around the world, but with that evolution come new risks and responsibilities. In 2025, the threat landscape is more complex than ever, as AI systems become both frequent targets of and tools for sophisticated cyberattacks.

How AI Is Changing the Security Threat Landscape

AI models, now integral to business, healthcare, and government systems, process vast amounts of sensitive data. That exposure, combined with machine-driven automation, has lowered the barrier for adversaries. Cybercriminals now use advanced AI for attacks, fraud, and data manipulation at a scale never seen before.

Key vulnerabilities include:

- Adversarial Inputs: Maliciously crafted data can force AI systems to malfunction, reveal internal logic, or leak secrets.

- Data Poisoning: Attackers taint training datasets, corrupting entire machine learning pipelines and skewing outcomes.

- Model Inversion and Extraction: Threat actors reverse-engineer models to steal intellectual property or infer private training data.

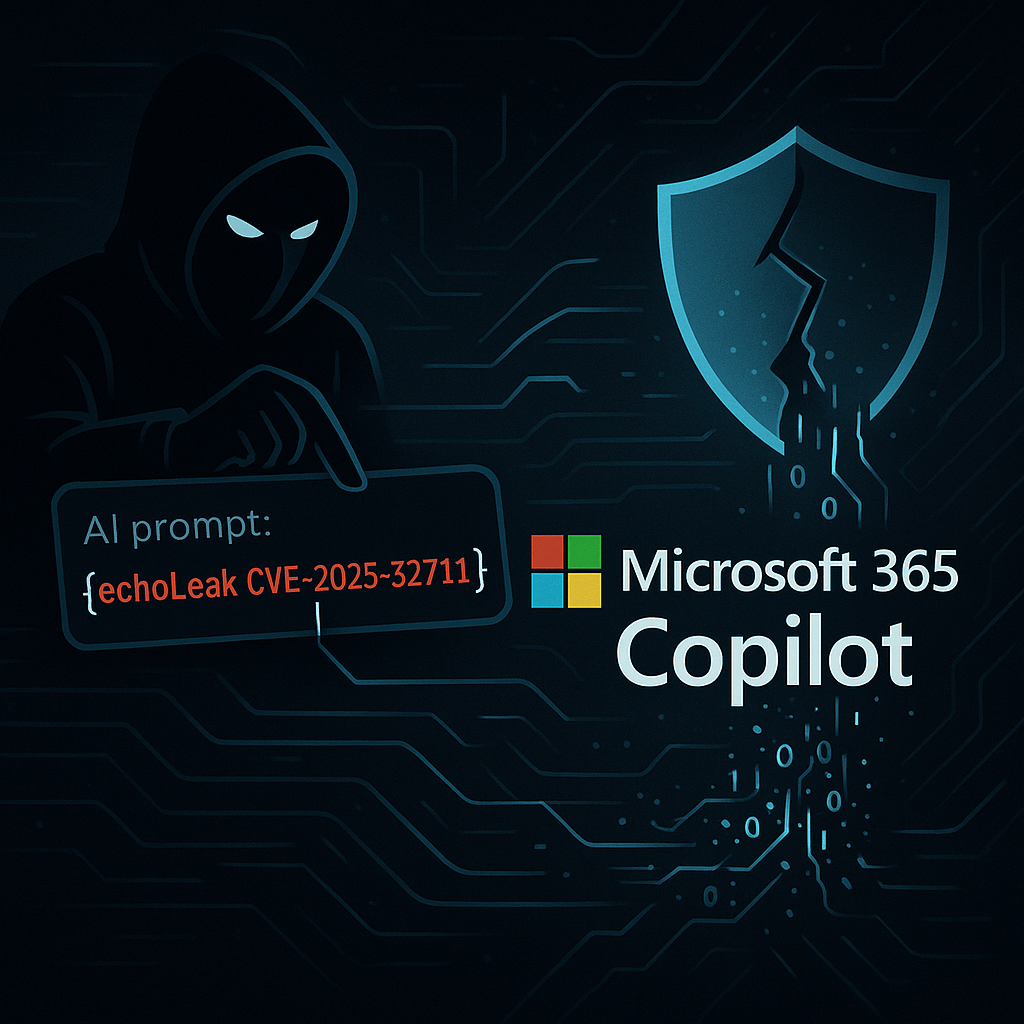

- Prompt Injection Attacks: Malicious queries can circumvent controls in generative and conversational AI models, leaking answers or data.
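To make the adversarial input item above concrete, here is a minimal sketch of how a small, bounded change to an input can flip a model's decision. It assumes a toy linear classifier; the weights, bias, and input values are made up for illustration and do not come from any real system. The perturbation follows the sign of each weight, which is the idea behind gradient-sign attacks on linear models.

```python
# Toy linear classifier; WEIGHTS and BIAS are hypothetical values for illustration.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.25


def score(x):
    """Return the raw decision score w.x + b."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    """Classify as 1 when the score is positive, otherwise 0."""
    return 1 if score(x) > 0 else 0


def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def adversarial_example(x, epsilon):
    """Move each feature by at most epsilon toward the opposite class."""
    # For a linear model, the gradient of the score with respect to x is
    # the weight vector, so the most damaging bounded step follows sign(w).
    direction = -1 if predict(x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


original = [0.3, 0.2, 0.1]
perturbed = adversarial_example(original, epsilon=0.1)
print(predict(original), predict(perturbed))
```

No feature moves by more than 0.1, yet the predicted class changes. Real models are not linear, but the same principle is why adversarial testing looks for small input changes that cause large output changes.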

Recent AI-Powered Security Breaches

2025 has already seen multiple major incidents:

- AI-generated ransomware used for extortion, with automated reconnaissance and smart ransom demands.

- Supply chain attacks leveraging AI-written malware and adversarial AI to bypass detection.

- Fraudulent employment schemes where foreign actors used AI to fake credentials and access sensitive enterprise environments.

These examples highlight that even organizations with advanced cyber defenses are vulnerable unless they prioritize new AI-driven security controls.

Best Practices for Improving AI Security

- Adversarial Training and Testing: Regularly expose models to adversarial data and perform penetration tests tailored to AI workflows. This builds resilience against manipulation and malicious input, and repeated testing helps reveal when model behavior has drifted.

- Continuous Monitoring: Use active telemetry and anomaly detection to watch for anomalous outputs, suspicious behavior, and unauthorized data access before they turn into a breach.

- Role-Based Access Controls: Restrict access to models and training data by role, and back those restrictions with multi-factor authentication, cryptographic protections, and regular audits.

- Governance and Documentation: Integrate AI risk management into broader security strategies, documenting data sources, approval processes, and model lifecycle events.

- Automated Response and Patch Management: Update detection systems and incident response plans for emerging AI threats, preparing for real-time containment and recovery.
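As one possible illustration of the continuous monitoring practice above, the sketch below flags telemetry values that deviate sharply from a rolling baseline using a z-score. The class name `OutputMonitor`, the monitored metric (response length), and the window, threshold, and sample values are all assumptions chosen for the example; a production system would likely combine several signals and a more robust detector.

```python
from collections import deque
from statistics import mean, stdev


class OutputMonitor:
    """Hypothetical rolling z-score detector for a single numeric metric."""

    def __init__(self, window=50, threshold=3.0, min_samples=10):
        self.history = deque(maxlen=window)  # recent values treated as normal
        self.threshold = threshold           # z-score above which we flag
        self.min_samples = min_samples       # baseline size needed to judge

    def observe(self, value):
        """Return True if value is anomalous relative to recent history."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                anomalous = True
        # Flagged values are kept out of the baseline so a burst of
        # abnormal traffic does not immediately redefine "normal".
        if not anomalous:
            self.history.append(value)
        return anomalous


monitor = OutputMonitor()
# Made-up response lengths standing in for normal traffic.
normal_lengths = [100, 104, 98, 101, 97, 103, 99, 102, 100, 96, 105, 98]
flags = [monitor.observe(v) for v in normal_lengths]
spike_flagged = monitor.observe(950)   # an unusually long response
after_spike = monitor.observe(101)     # traffic returns to normal
print(any(flags), spike_flagged, after_spike)
```

Excluding flagged values from the baseline is a design choice with a trade-off: it resists sudden bursts, but a slow, deliberate shift could still move the baseline over time, so a detector like this should complement periodic review rather than replace it.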

Looking Ahead: Security Leaders Must Adapt

AI will continue to reshape both offense and defense in cybersecurity. Leaders must invest in robust controls, audit their digital supply chains, and incorporate AI awareness into culture and policy. Regulatory bodies are also stepping up oversight, demanding transparency in how AI systems are built, operated, and secured.

With threats and solutions evolving daily, the path forward is clear: embrace innovation, but keep security as the foundation. Improved security with AI means ongoing diligence, collaboration across teams, and a willingness to learn from every new challenge.
