MLOps Security Nightmare: 5 Critical Vulnerabilities Putting Your AI Startup at Risk

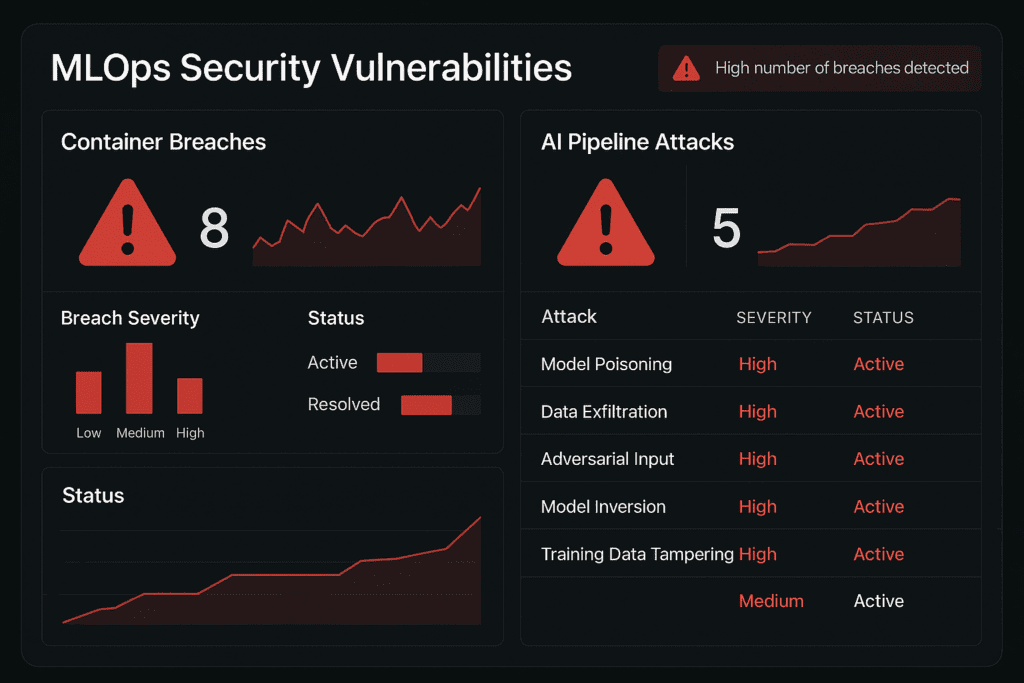

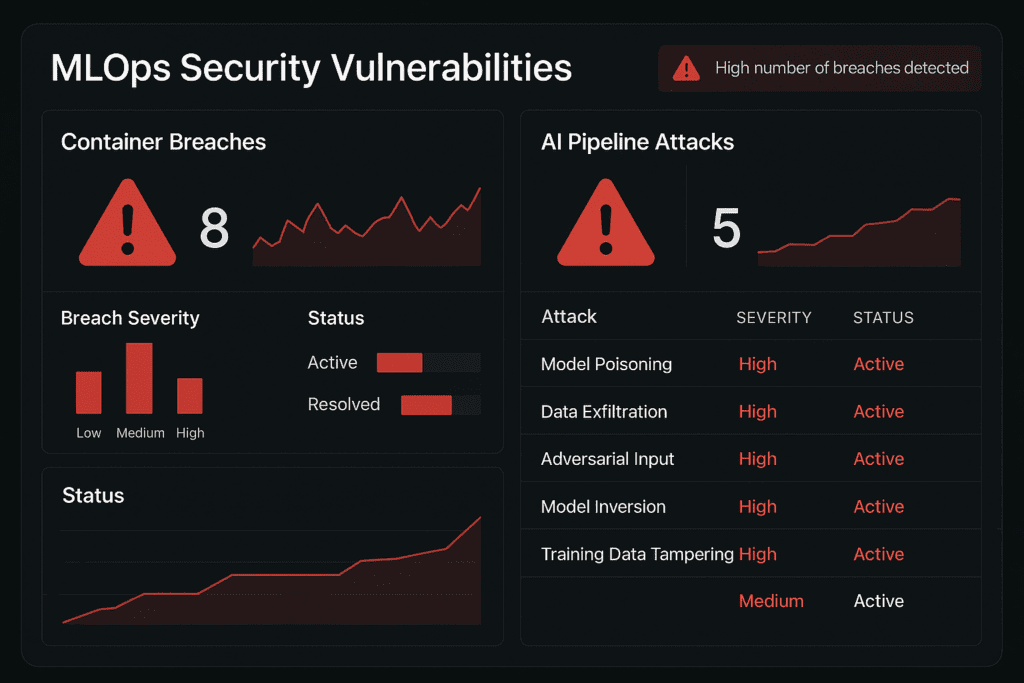

Stop everything and check your stack NOW. Security researchers have disclosed more than 20 vulnerabilities across popular MLOps platforms, and attackers are actively exploiting AI pipelines to hijack servers, steal models, and poison datasets.

The Alarming Reality: You’re Already Compromised

78% of exposed container registries have overly permissive access controls, and more than 8,000 AI model registries sit wide open on the internet. If you run unpatched versions of popular ML tools such as Weave, ZenML, or Deep Lake, you are likely vulnerable to:

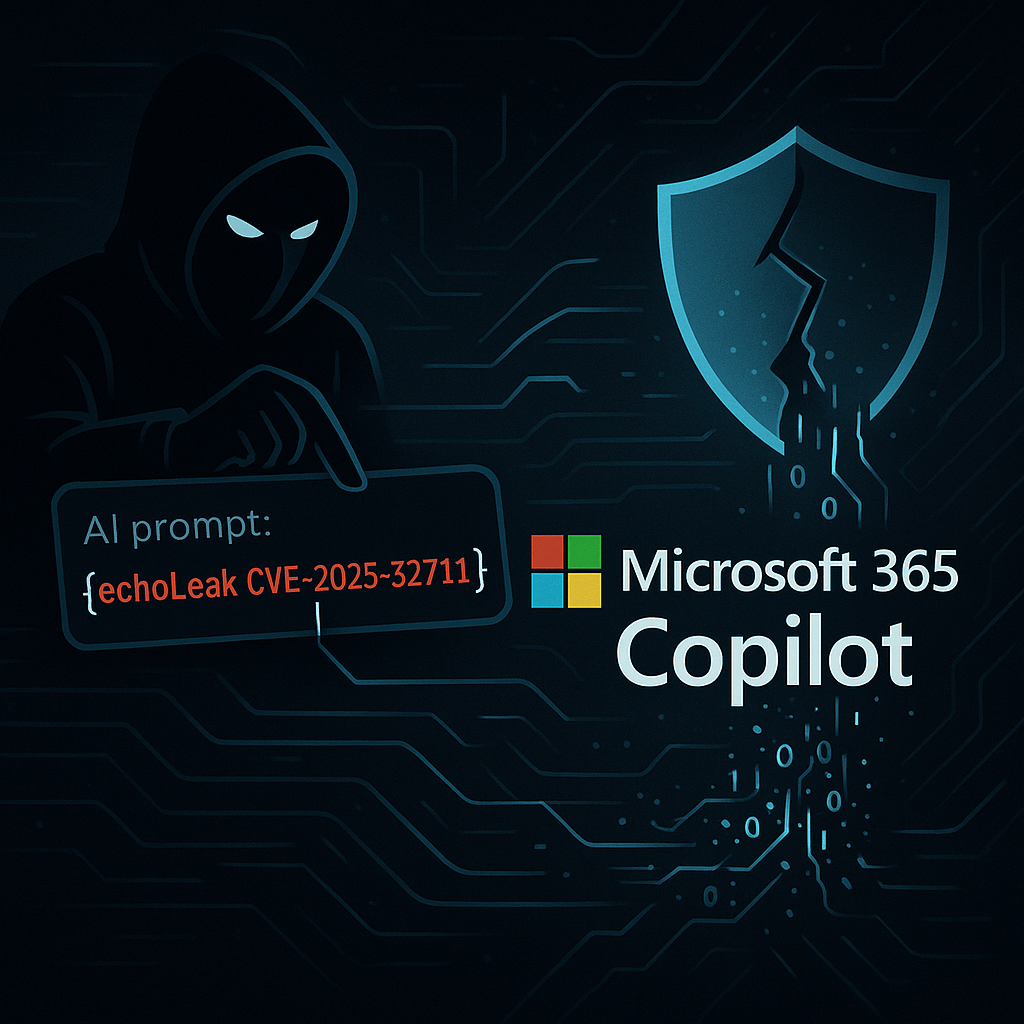

- Model Registry Hijacking – CVE-2024-7340, a directory-traversal flaw in Weave, lets low-privilege users read files on the server, including one that holds an admin API key, and escalate to admin

- Container Escape Attacks – Malicious models can break containment and access your entire infrastructure

- Supply Chain Poisoning – Attackers inject backdoors into CI/CD pipelines, compromising every downstream deployment

- Shadow AI Exploitation – Unauthorized AI tools open unmonitored entry points and add an estimated $670K to the average breach cost

- Pipeline Takeover – Direct access to ML databases, training data, and production models
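CVE-2024-7340 belongs to a familiar bug class, directory traversal: a server builds a filesystem path from user input without checking where that path actually lands. The snippet below is not Weave's code or its actual fix. It is a minimal sketch of the usual defense, built around a hypothetical helper named `resolve_within`. The helper resolves the requested path first and then rejects anything that falls outside an allowed base directory. It assumes Python 3.9+ for `Path.is_relative_to`.

```python
from pathlib import Path


def resolve_within(base_dir: str, user_path: str) -> Path:
    """Resolve user_path under base_dir, refusing anything that escapes it.

    Hypothetical helper for illustration only; not taken from Weave or any
    other MLOps tool.
    """
    base = Path(base_dir).resolve()
    # resolve() collapses ".." segments and follows symlinks, so the check
    # runs against the real location instead of the raw string. An absolute
    # user_path replaces base entirely and is then rejected by the check.
    candidate = (base / user_path).resolve()
    if not candidate.is_relative_to(base):
        raise PermissionError(f"path escapes {base}: {user_path!r}")
    return candidate
```

A file-serving endpoint in a registry or pipeline would run a check like this before opening anything. Resolving first and opening later still leaves a small symlink race, so stricter setups also open files relative to a trusted directory handle.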

Why Small AI Companies Are Prime Targets

Your startup's advantages are also your biggest vulnerabilities:

- Rapid deployment cycles skip security reviews

- Small teams lack dedicated security expertise

- Popular open-source MLOps tools are frequent targets for zero-day exploits

- Container-based architectures create massive attack surfaces

The Real Cost? Company Death

One successful attack can:

- Steal your entire model library and training datasets

- Inject backdoors into production AI systems

- Compromise customer data through poisoned predictions

- Trigger regulatory penalties and customer abandonment

Immediate Action Required

This week, audit these 5 critical areas:

- Container registry permissions and access logs

- ML pipeline authentication and authorization

- Model artifact integrity and provenance

- Third-party AI tool inventory and governance

- Incident response plans for AI-specific breaches
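A low-effort starting point for the artifact-integrity audit is to record a SHA-256 digest for every model artifact at build time and to verify those digests before deployment. The sketch below assumes a hypothetical JSON manifest that maps artifact file names to hex digests. The manifest format and the function names are illustrative and do not come from any particular tool. Hashes only prove integrity relative to a manifest you trust. Provenance therefore also requires protecting the manifest itself, for example by signing it or storing it separately from the artifacts.

```python
import hashlib
import json
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so multi-GB model weights never sit fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(manifest_path: str) -> list[str]:
    """Check each artifact against the digest recorded in a JSON manifest.

    Hypothetical manifest format: {"model.safetensors": "<hex sha256>", ...},
    with file names relative to the manifest's own directory. The manifest is
    assumed to be trusted (e.g. signed); this function does not verify that.
    Returns the names of artifacts that are missing or whose digest differs.
    """
    manifest_file = Path(manifest_path)
    root = manifest_file.parent
    expected = json.loads(manifest_file.read_text())
    failures = []
    for name, want in expected.items():
        artifact = root / name
        if not artifact.is_file() or sha256_of(artifact) != want.lower():
            failures.append(name)
    return failures
```

A deployment step could call `verify_artifacts("models/manifest.json")` (hypothetical path) and abort the release whenever the returned list is non-empty.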

The attackers aren’t waiting for your security roadmap to mature. Every day of delay increases your exposure.
