Why Your Developers Are Creating Security Nightmares with AI

Shocking truth: 45% of AI-generated code contains security vulnerabilities, yet 76% of technology workers mistakenly believe AI code is more secure than human-written code.

Here’s what’s happening in development teams right now:

The Trust Paradox: Developers under pressure blindly integrate AI-generated code without thorough review. Research shows that in 80% of tasks, developers using AI tools produced less secure code than those coding without AI, yet they were 3.5 times more likely to believe their code was secure.

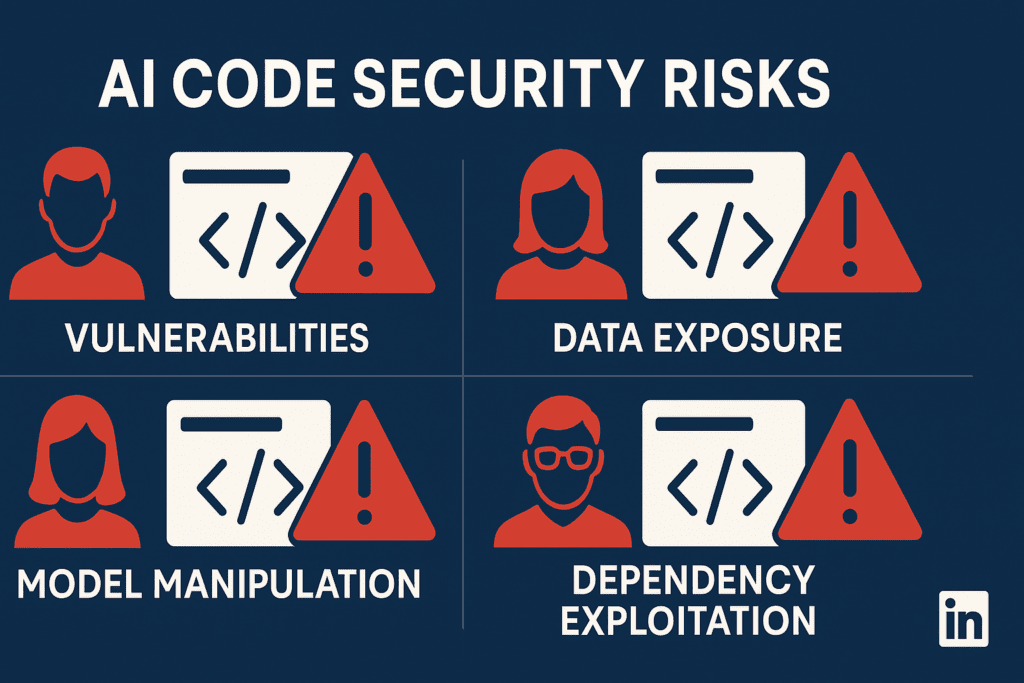

Common Vulnerabilities Being Introduced:

- Cross-site scripting (XSS) vulnerabilities in 86% of relevant AI-generated code samples

- Log injection flaws in 88% of relevant samples

- Hardcoded secrets and API keys embedded directly in source code

- Insecure dependencies and outdated libraries pulled in without security vetting
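To make the log injection and hardcoded-secret items concrete, here is a minimal Python sketch of the safer pattern next to the risky one (kept as comments). It assumes a plain standard-library `logging` setup; the environment variable name `APP_API_KEY` and the helper names are hypothetical, chosen only for illustration.

```python
import logging
import os

logger = logging.getLogger("app")

# Patterns that often slip through review (shown as comments, not executed):
#   API_KEY = "sk-live-EXAMPLE"                    # secret committed to source control
#   logger.info("Login failed for " + username)    # raw user input written to logs


def load_api_key(var_name: str = "APP_API_KEY") -> str:
    """Read the key from the environment instead of hardcoding it.

    APP_API_KEY is a hypothetical variable name; use whatever your
    secret manager or deployment platform injects.
    """
    key = os.environ.get(var_name)
    if not key:
        raise RuntimeError(f"{var_name} is not set")
    return key


def sanitize_for_log(value: str) -> str:
    """Escape CR and LF so user input cannot forge additional log lines."""
    return value.replace("\r", "\\r").replace("\n", "\\n")


def log_failed_login(username: str) -> None:
    # %-style arguments defer formatting to the logging module;
    # the sanitizer is what stops newline-based log forging.
    logger.warning("Login failed for user=%s", sanitize_for_log(username))


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    # A forged entry stays on one line instead of posing as a new record.
    log_failed_login("alice\nINFO:app:Login succeeded for user=admin")
```

Escaping CR/LF is only one defense; structured (for example JSON) logging achieves the same goal by keeping user input inside a quoted field.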

Why AI Fails at Security: AI models learn from publicly available code repositories, many containing vulnerabilities. They lack contextual understanding of security requirements and can’t perform the complex analysis needed for secure coding decisions.

The Volume Problem: A single AI agent can generate hundreds of potentially vulnerable code snippets daily. Traditional code review processes weren’t built for this volume, leaving security teams overwhelmed.
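Automation is the only practical way to keep up with that volume. As a rough illustration, the Python sketch below flags likely hardcoded secrets across a batch of generated snippets. The three regex rules are illustrative assumptions, far smaller than the rule sets dedicated secret scanners ship, and the function names are hypothetical.

```python
import re
from typing import Dict, Iterable, List, Tuple

# Illustrative rules only; production scanners use many more, tuned patterns.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "generic_assignment": re.compile(
        r"""(?i)\b(api[_-]?key|secret|token|password)\b\s*[:=]\s*['"][^'"]{8,}['"]"""
    ),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def scan_snippet(snippet: str) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line matching a rule."""
    findings = []
    for lineno, line in enumerate(snippet.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


def scan_batch(snippets: Iterable[str]) -> Dict[int, List[Tuple[int, str]]]:
    """Scan many snippets and keep only the ones with findings, keyed by index."""
    results = {}
    for idx, snippet in enumerate(snippets):
        hits = scan_snippet(snippet)
        if hits:
            results[idx] = hits
    return results
```

Usage: `scan_batch(['API_KEY = "sk-live-1234567890abcdef"', "def add(a, b):\n    return a + b"])` reports only the first snippet, so reviewers can focus on the handful of flagged snippets instead of reading hundreds by hand.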

The scariest part? These vulnerabilities hide well. A syntax error breaks the build immediately, but a security flaw lets code run normally while quietly opening an attack path.
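A small Python example shows how this plays out with cross-site scripting. Both functions below are hypothetical and return identical HTML for ordinary input, so a quick test or demo would pass either one; only a malicious input reveals that the first one lets injected markup through.

```python
import html


def greeting_unsafe(name: str) -> str:
    # Renders fine for ordinary names, so tests and demos pass.
    return f"<p>Hello, {name}!</p>"


def greeting_safe(name: str) -> str:
    # html.escape turns &, <, > and quotes into HTML entities,
    # so the browser shows the input as text instead of running it.
    return f"<p>Hello, {html.escape(name)}!</p>"


if __name__ == "__main__":
    payload = "<script>alert(1)</script>"
    print(greeting_unsafe("Ada"))    # <p>Hello, Ada!</p>
    print(greeting_unsafe(payload))  # <p>Hello, <script>alert(1)</script>!</p>
    print(greeting_safe(payload))    # <p>Hello, &lt;script&gt;alert(1)&lt;/script&gt;!</p>
```

In a real web application, a templating engine with autoescaping enabled is the usual safeguard; hand-built HTML strings like `greeting_unsafe` are exactly the kind of code worth flagging in review.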

At Rivia, we help development teams implement secure AI coding practices and automated security scanning that catches these issues before they reach production. Don’t let AI speed become your security weakness.
